For my final project I have two ideas. I should probably stick to one but what the heck.

Idea no. uno!

Have you ever thought about how the future affects the present? Hmmmmm. What??? What if events happening in the future could ripple their consequences into today... I am afraid that if I keep going deeper into this subject I might start making terrible YouTube videos about how the government is traveling through time to stop free thinking, suppress free energy, elect Trump and hide extraterrestrial evidence from the rest of America.

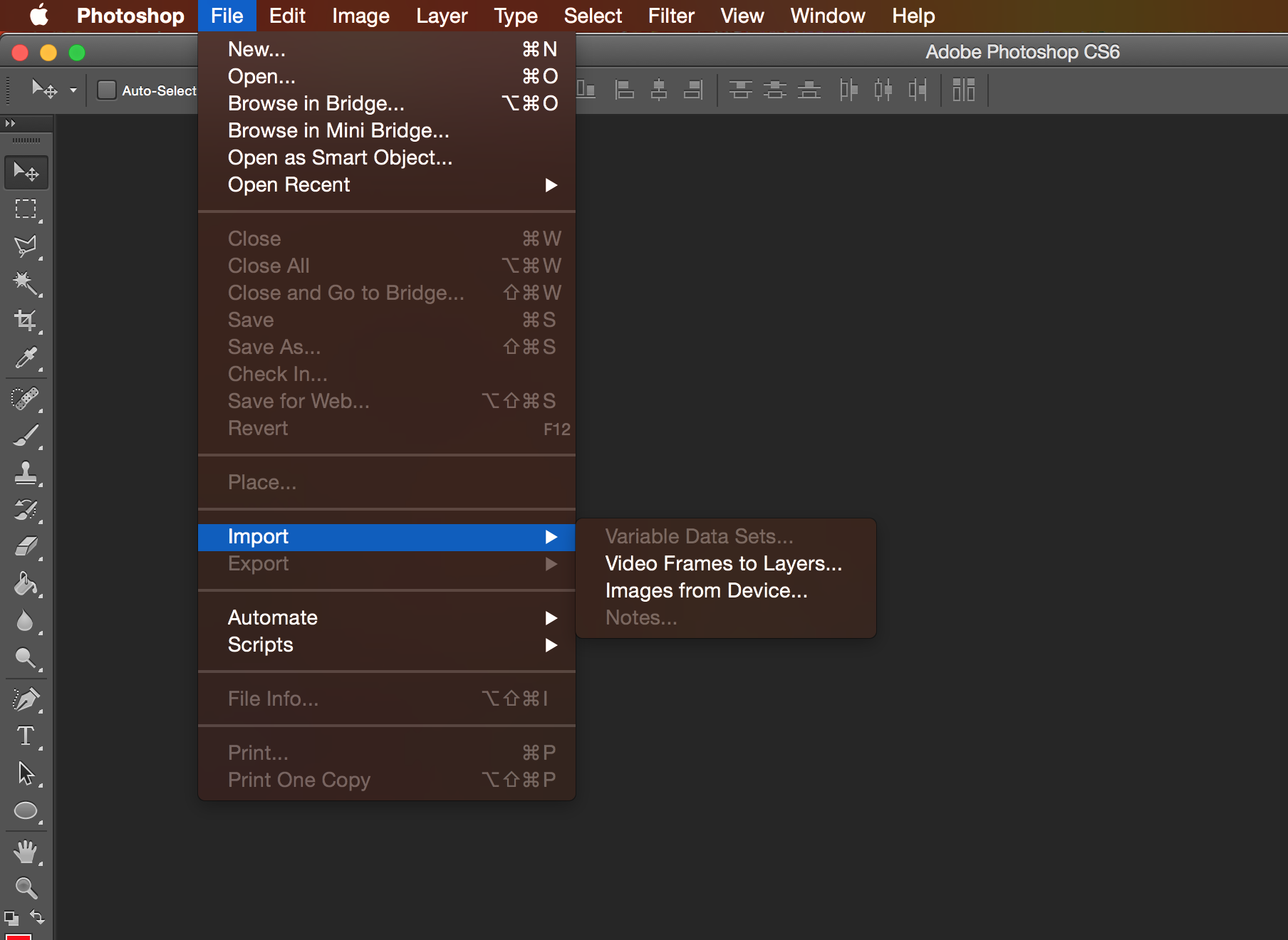

Anyways. How would I make this into a Chrome extension? In a week... "a week". I guess time has a different meaning if you can move freely through it. Ahhhh! Back to Chrome extensions.

Spoiler alert: [I am not really sending messages from the future.]

What if searches from the future could show their results today? What if one day you open a new tab and see this:

Weeks later, long after you have completely forgotten about that Sri Lankan politician, you open a new tab only to be redirected to a Wikipedia page:

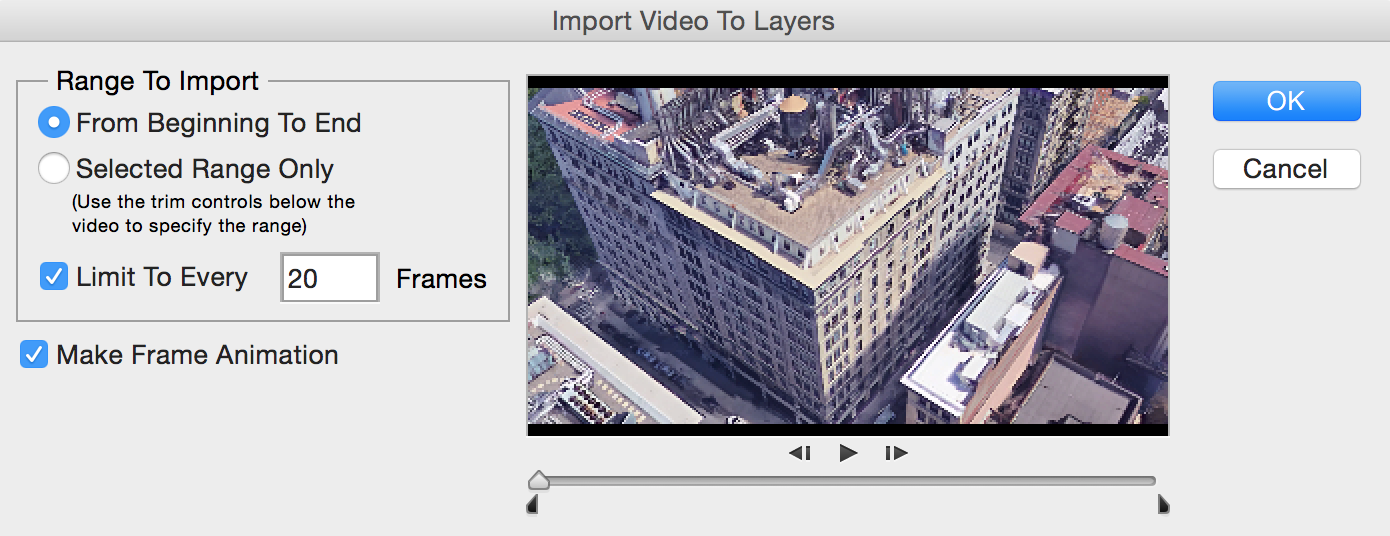

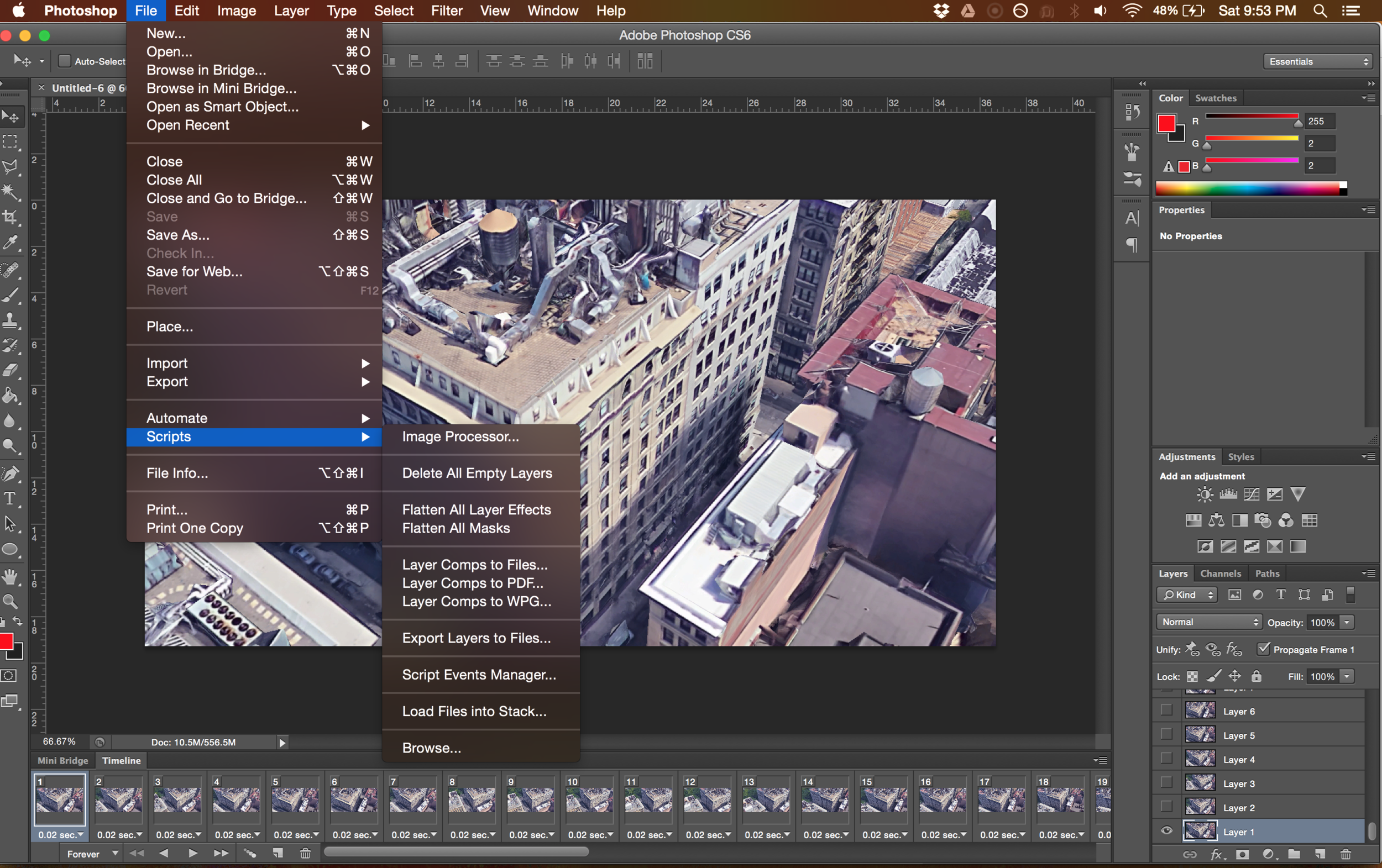

What do I need to achieve this?

- First, learn how to use the Wikipedia API, find articles and extract the description out of them.

- Store these search terms/pages on a server or in a database for the future redirection.

- A Chrome extension with a basic new tab redirect.

- Some JS program in the extension to decide when to redirect you to a new hint from the future, or to the full search.
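To make that list a bit more concrete, here is a minimal sketch of the two pure pieces: building a Wikipedia summary request and deciding between "hint" and "full redirect". It assumes the Wikipedia REST summary endpoint (`/api/rest_v1/page/summary/{title}`), whose JSON response carries an `extract` field with the short description. The `FutureHint` shape, the function names and the delay logic are all my own placeholders, not anything final.

```typescript
// Hypothetical shape for one stored "search from the future".
interface FutureHint {
  title: string;     // Wikipedia article title, e.g. "Alan Turing"
  plantedAt: number; // ms since epoch when the hint was first shown
  delayMs: number;   // how long to wait before the full redirect (assumption)
}

// Build the Wikipedia REST summary URL for an article title.
// Wikipedia titles use underscores in place of spaces.
function summaryUrl(title: string): string {
  const slug = encodeURIComponent(title.trim().replace(/ /g, "_"));
  return `https://en.wikipedia.org/api/rest_v1/page/summary/${slug}`;
}

// Pull the short description out of a parsed summary response, if present.
function extractDescription(body: unknown): string | null {
  if (typeof body === "object" && body !== null && "extract" in body) {
    const extract = (body as { extract: unknown }).extract;
    return typeof extract === "string" ? extract : null;
  }
  return null;
}

// Decide what the new tab should do for a given hint at time `now`:
// keep teasing with the hint, or redirect to the full page.
function nextAction(hint: FutureHint, now: number): "hint" | "redirect" {
  return now - hint.plantedAt >= hint.delayMs ? "redirect" : "hint";
}
```

In the extension, the new tab page would fetch `summaryUrl(hint.title)`, pass the parsed JSON to `extractDescription`, and call `nextAction(hint, Date.now())` to choose what to show.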

Idea número dos

This would combine my class of Rest Of You with Crazy DanO as well as some pcomp madness.

The idea consists of utilizing galvanic skin response sensors to constantly monitor your internet experience. If the sensor detects a rise in arousal over a defined threshold, it would send a signal to the Chrome extension to record the arousal value as well as a screen recording of the active tab.

Constant Galvanic Skin Response Measurement

If the reading goes past a threshold, save a screen capture to the server. Also record the reading value.
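A rough sketch of that threshold check, assuming readings arrive as plain numbers. One choice here is mine and not part of the plan above: it only fires at the moment the reading crosses the threshold going up, so a long stretch above the threshold produces one capture instead of hundreds. The names `ArousalEvent` and `makeThresholdDetector` are made up for illustration.

```typescript
// What gets recorded when the threshold is crossed.
interface ArousalEvent {
  value: number; // the sensor reading that crossed the threshold
  at: number;    // timestamp of the reading, ms since epoch
}

// Returns a function that takes each new reading and reports an event
// only on the upward crossing of `threshold` (rising edge).
function makeThresholdDetector(threshold: number) {
  let wasAbove = false; // whether the previous reading was above threshold
  return (value: number, at: number): ArousalEvent | null => {
    const isAbove = value > threshold;
    const crossed = isAbove && !wasAbove;
    wasAbove = isAbove;
    return crossed ? { value, at } : null;
  };
}
```

Whenever the detector returns an event, the extension could grab the active tab (for a still image, `chrome.tabs.captureVisibleTab` returns a data URL) and send it to the server together with `value`.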

- The most complicated part of this project, based on my expertise, might be getting the Arduino to constantly send values to the Chrome extension.

- I would also need access to the tabs and all websites.

- A way to save images to a server or database.
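For the Arduino part, here is a small sketch of one piece I can already pin down: turning the raw text stream into numbers. It assumes the Arduino prints one reading per line (for example with `Serial.println`) and that the text reaches the extension in arbitrary chunks. How it gets there (Web Serial, a local bridge server, something else) is still open. `makeLineParser` is a hypothetical helper name.

```typescript
// Returns a function that accepts chunks of serial text and gives back
// every complete numeric reading seen so far. Partial lines are buffered
// until their newline arrives.
function makeLineParser() {
  let buffer = "";
  return (chunk: string): number[] => {
    buffer += chunk;
    const lines = buffer.split("\n");
    buffer = lines.pop() ?? ""; // keep the unfinished last line for later
    return lines
      .map((line) => line.trim())          // drops the "\r" from "\r\n"
      .filter((line) => line !== "")       // skip blank lines
      .map((line) => Number(line))
      .filter((n) => Number.isFinite(n));  // skip anything non-numeric
  };
}
```

Each number that comes out of the parser can be fed straight into the threshold check.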